Teaching AI to Say “I Don’t Know”

A Story About ECGs, Deep Learning, and the Power of Uncertainty

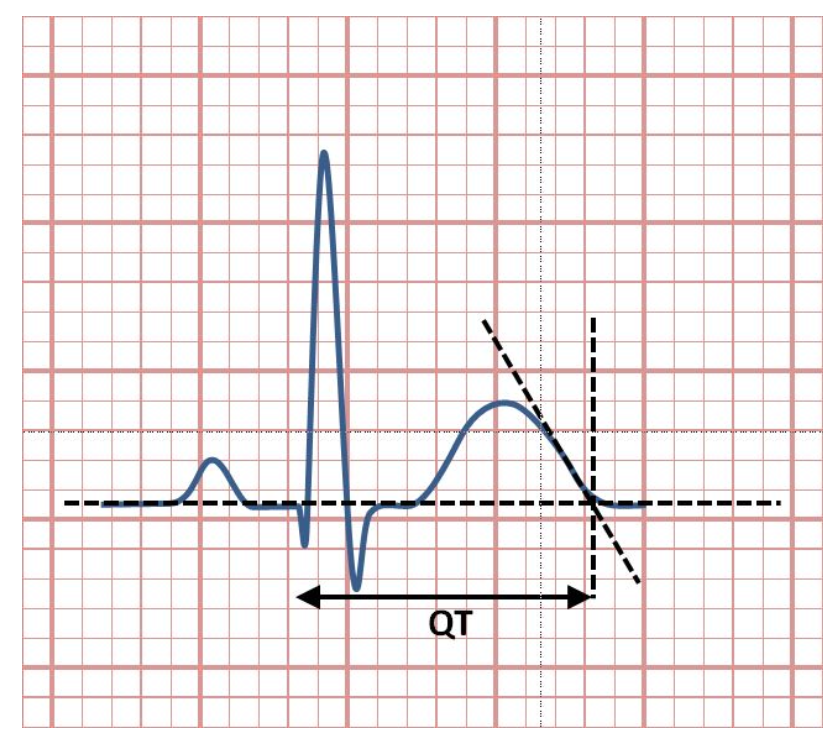

In the early days of cardiology, long before wearable monitors and deep learning, physicians would pore over ECG printouts like detectives at a crime scene. Somewhere in the peaks and troughs of the heartbeat waveform, between the Q and the T, lay a critical clue. A subtle signal that hinted at a heart in distress, or one in perfect rhythm.

This was the QT interval, a measure of how long the heart’s ventricles take to fire and then reset with each beat. Too long, and the heart might falter. Too short, and it might misfire. It’s a small measurement with big implications.

Fast forward to today, and algorithms, not just doctors, are reading those waves. But here’s the catch. Most of those algorithms read with unshakable confidence. They always have an answer, even when they probably shouldn’t.

At PulseAI, we asked ourselves: what if the smartest thing an AI could do was simply to stay quiet?

Figure 1. The QT interval as measured on the electrocardiogram (ECG).

The Confidence Problem

We live in a world that rewards decisiveness. Fast decisions. Strong convictions. Clear answers.

That works, until it doesn’t. Especially in medicine.

Today’s ECG analysis tools, powered by deep learning, are remarkably good at spitting out numbers. QT interval: 432 milliseconds. But what’s missing is nuance. Doubt. The possibility that the signal was noisy. The lead was loose. Or maybe, just maybe, the model had never seen anything quite like that ECG before.

The problem isn’t that the AI gets it wrong. The problem is that it never tells us when it might be wrong.

And so, we asked a new question.

What if we trained our model not just to predict, but to be uncertain?

Building a Model That Doubts

At PulseAI, we designed a deep learning model that looks at ECGs from a full 12-lead setup, or even just a single lead, and not only predicts the QT interval, but also tells us how confident it is.

Under the hood, we used InceptionTime, a convolutional architecture designed for time series such as ECG signals. But the real breakthrough wasn’t the architecture. It was what we added on top of it.

We trained the model to capture two forms of uncertainty:

Aleatoric uncertainty: the noise that comes from the world itself, like fuzzy signals or motion artifacts.

Epistemic uncertainty: the doubt that lives inside the model, caused by unfamiliar patterns or limited training data.

It’s a bit like teaching a medical student to say, “I think the QT is 430 milliseconds, but I’d like someone to double-check.”
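To make the two kinds of uncertainty concrete, here is a minimal sketch of one common way to separate them. It assumes an ensemble of models, each of which outputs a mean and a variance for the QT interval. The post does not say which technique was actually used, so the function name, the ensemble setup, and the numbers are all illustrative assumptions.

```python
import numpy as np

def decompose_uncertainty(means, variances):
    """Split predictive uncertainty into aleatoric and epistemic parts.

    means, variances: arrays of shape (n_members, n_ecgs), holding each
    hypothetical ensemble member's predicted QT mean (ms) and variance (ms^2).
    """
    means = np.asarray(means, dtype=float)
    variances = np.asarray(variances, dtype=float)
    prediction = means.mean(axis=0)      # ensemble QT estimate
    aleatoric = variances.mean(axis=0)   # average predicted noise (data)
    epistemic = means.var(axis=0)        # disagreement between members (model)
    total_std = np.sqrt(aleatoric + epistemic)
    return prediction, aleatoric, epistemic, total_std

# Made-up predictions from 3 ensemble members for 2 ECGs.
# ECG 0: members agree, clean signal. ECG 1: members disagree, noisier signal.
means = [[430.0, 410.0], [432.0, 450.0], [428.0, 430.0]]
variances = [[25.0, 100.0], [25.0, 100.0], [25.0, 100.0]]

prediction, aleatoric, epistemic, total_std = decompose_uncertainty(means, variances)
print("QT estimate (ms):", prediction)
print("aleatoric variance:", aleatoric)
print("epistemic variance:", epistemic)
print("total std (ms):", total_std)
```

Both ECGs get the same QT estimate here, but the second carries far more doubt, and most of it comes from the members disagreeing with each other.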

What We Found

We tested our model across a wide range of ECG formats, from standard hospital-grade 12-lead recordings to 6-lead and single-lead signals you might get from a smartwatch [1].

And the results were clear.

On 12-lead ECGs, the model’s predictions were highly accurate, within 8 milliseconds of expert consensus on average.

As we reduced the number of leads, the uncertainty naturally increased. But critically, the model told us that.

Across all lead configurations, our uncertainty predictions were well calibrated. The size of the error the model expected matched the size of the error we actually observed.
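One simple way to check a claim like this is to compare nominal and empirical coverage: if the model says its error has a given standard deviation, then roughly 95% of true values should fall inside its 95% interval. The sketch below assumes Gaussian predictive distributions and uses synthetic data in place of real ECG results. The function name and all numbers are hypothetical.

```python
import numpy as np
from statistics import NormalDist

def empirical_coverage(y_true, y_pred, y_std, level):
    """Fraction of true values inside the central `level` Gaussian interval."""
    z = NormalDist().inv_cdf(0.5 + level / 2.0)  # e.g. about 1.96 for 95%
    error = np.abs(np.asarray(y_true) - np.asarray(y_pred))
    return float((error <= z * np.asarray(y_std)).mean())

# Synthetic stand-in for a test set: errors are drawn so that they really do
# follow the stated standard deviation, i.e. a perfectly calibrated model.
rng = np.random.default_rng(0)
n = 20000
y_std = rng.uniform(5.0, 20.0, size=n)      # predicted std per ECG (ms)
y_pred = rng.uniform(350.0, 500.0, size=n)  # predicted QT per ECG (ms)
y_true = y_pred + rng.normal(0.0, y_std)    # "expert" QT per ECG (ms)

coverage = {
    level: empirical_coverage(y_true, y_pred, y_std, level)
    for level in (0.5, 0.8, 0.95)
}
for level, observed in coverage.items():
    print(f"nominal {level:.0%} interval covers {observed:.1%} of cases")

# An overconfident model (std reported at half its true size) covers too little.
overconfident = empirical_coverage(y_true, y_pred, 0.5 * y_std, 0.95)
print(f"overconfident model, nominal 95%: {overconfident:.1%}")
```

For a well-calibrated model the observed coverage sits close to the nominal level at every interval width, while an overconfident model falls clearly short.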

In other words, the model knew when it didn’t know.

Why This Matters

Picture a hospital corridor. A doctor is glancing at a screen showing a QT interval. If it’s borderline long, do they act? Do they wait? Do they retest?

Now imagine the AI quietly says, “This reading is uncertain. There’s noise in the signal.” Or, “This ECG looks unlike anything I’ve seen.”

That moment of hesitation can trigger a second look, a recheck, or a more cautious approach. It can save time, prevent unnecessary panic, or even flag a high-risk case that might otherwise be missed.
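As a rough sketch of how those two messages could be produced, the function below turns the two uncertainty estimates into review prompts. The function name and the 15 ms limits are hypothetical placeholders chosen for illustration. They are not clinical thresholds and are not taken from our study.

```python
def review_flags(aleatoric_std_ms, epistemic_std_ms,
                 noise_limit_ms=15.0, novelty_limit_ms=15.0):
    """Return the messages to show alongside a QT reading.

    Both limits are illustrative assumptions, not validated values.
    """
    flags = []
    if aleatoric_std_ms > noise_limit_ms:
        # High data noise: a repeat recording may help.
        flags.append("This reading is uncertain. There's noise in the signal.")
    if epistemic_std_ms > novelty_limit_ms:
        # High model doubt: the ECG is unlike the training data.
        flags.append("This ECG looks unlike anything I've seen.")
    return flags

print(review_flags(6.0, 4.0))    # clean, familiar ECG: no flags
print(review_flags(22.0, 4.0))   # noisy recording
print(review_flags(8.0, 30.0))   # unfamiliar pattern
```

Keeping the two sources separate matters because they call for different responses: a noisy signal suggests re-recording, while an unfamiliar pattern suggests a human read.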

Because uncertainty is not weakness. In medicine, it’s wisdom.

The Bigger Picture

We’re entering a new era of AI in healthcare. But if that AI is going to sit beside doctors, influence decisions, or even replace parts of the diagnostic process, it needs more than just accuracy.

It needs humility.

Our work at PulseAI is about building AI that doesn’t just give answers. It gives honest answers. Sometimes that means saying, “Yes, this looks normal.” Sometimes it means saying, “There’s risk here.”

And sometimes, the most powerful thing it can say is,

“I’m not sure.”

The Takeaway

Trust doesn’t come from perfection. It comes from honesty, transparency, and knowing your limits.

In building uncertainty-aware AI for QT interval measurement, we’re not just improving ECG interpretation. We’re laying the groundwork for a new kind of medical intelligence. One that informs, supports, and knows when to step back.

After all, the best clinicians don’t always know the answer.

But they always know when to ask for help.

Shouldn’t our machines do the same?

References

[1] Doggart P, Kennedy A, Bond R, Finlay D. Uncertainty Calibrated Deep Regression for QT Interval Measurement in Reduced Lead Set ECGs. In: 2024 35th Irish Signals and Systems Conference (ISSC); 2024 Jun 13; pp. 1-6. IEEE.